2 Recommended resources

2.1 Resources for handling big data in R

Notes:

Medium-sized datasets (< 2 GB): can be loaded into R (within the memory limit), but processing is cumbersome (typically in the 1-2 GB range).

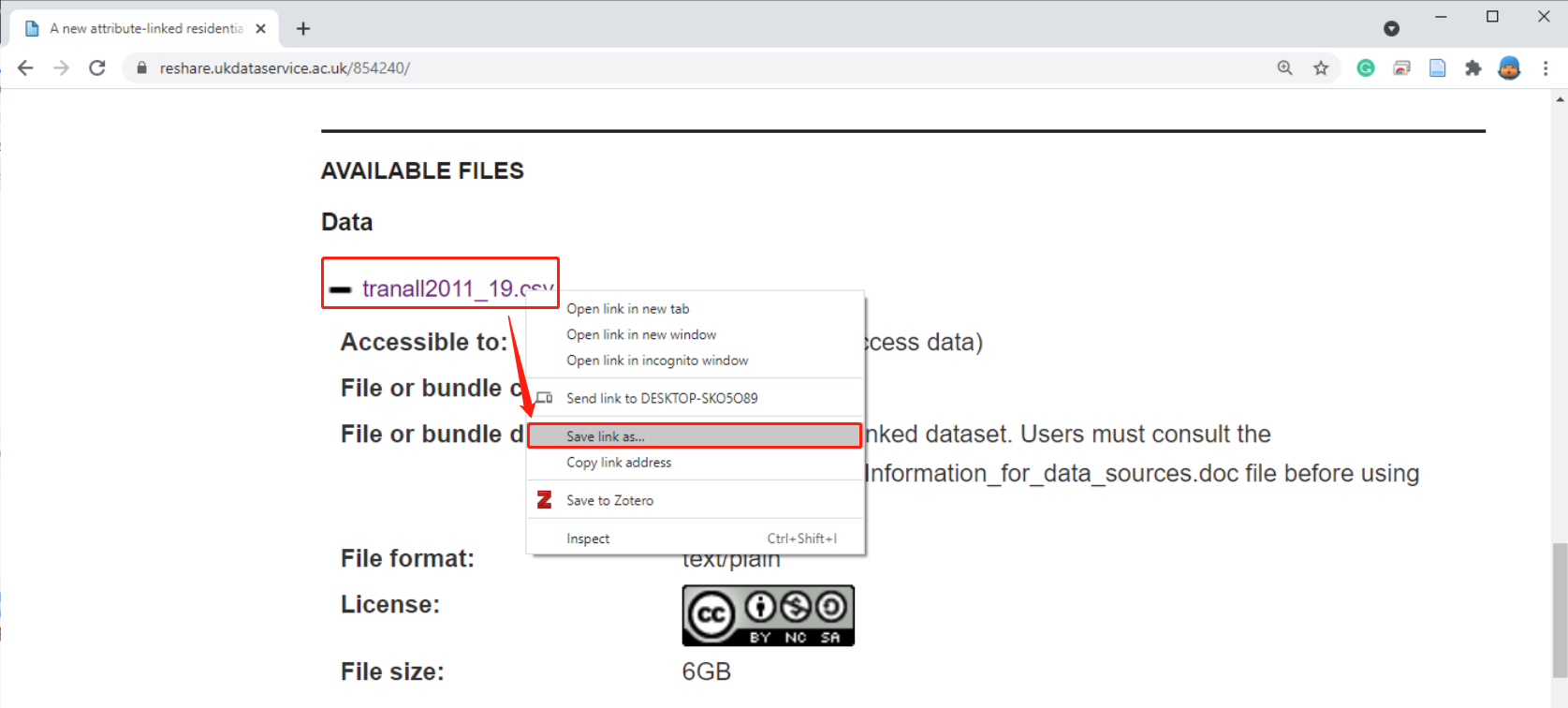

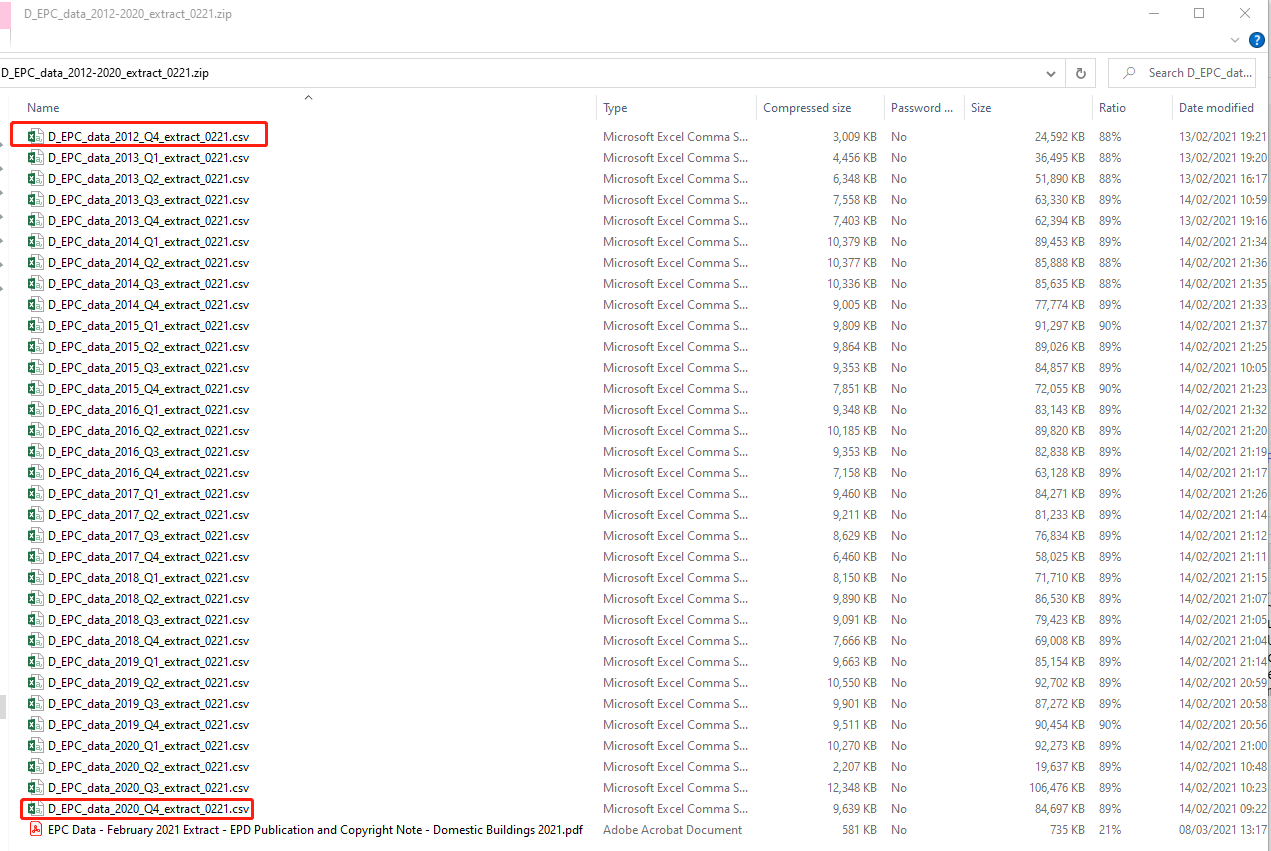

Large files that cannot be loaded into R due to R or OS limitations:

Large files (2-10 GB): process locally using workaround solutions.

Very large files (> 10 GB): require distributed, large-scale computing.
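To see which of these categories a dataset falls into, its size on disk can be checked with base R's file.size() and its in-memory footprint with object.size(). The helper classify_by_size() below is hypothetical: it simply applies the 2 GB and 10 GB thresholds from the notes above, and it assumes 1 GB = 1024^3 bytes.

```r
# Hypothetical helper: map a size in bytes to the categories listed above.
# Thresholds (2 GB and 10 GB) come from the notes; 1 GB is assumed to be 1024^3 bytes.
classify_by_size <- function(bytes) {
  gb <- bytes / 1024^3
  if (gb < 2) {
    "medium"        # can be loaded into R
  } else if (gb <= 10) {
    "large"         # process locally with workarounds
  } else {
    "very large"    # needs distributed computing
  }
}

# Example: size of a small CSV on disk and of the data frame in memory
df <- data.frame(x = 1:1000, y = rnorm(1000))
path <- tempfile(fileext = ".csv")
write.csv(df, path, row.names = FALSE)

file.size(path)                        # bytes on disk
format(object.size(df), units = "Mb")  # approximate memory used by df
classify_by_size(file.size(path))      # "medium"
```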

Notes:

Rule of thumb:

Data sets that contain up to one million records can easily be processed with standard R.

Data sets with about one million to one billion records can also be processed in R, but require some additional effort.

Data sets that contain more than one billion records need to be analyzed with MapReduce algorithms.
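Applying this rule requires the record count, which for a large CSV file can be obtained without loading the whole file into memory by reading it in chunks. The sketch below uses only base R; count_records() and suggest_approach() are hypothetical helpers. count_records() assumes one record per line (no embedded newlines inside quoted fields) and a single header line, and suggest_approach() uses the thresholds from the rule of thumb above.

```r
# Hypothetical helper: count data rows in a text file, reading `chunk_size` lines
# at a time so that only one chunk is held in memory. Assumes one record per line.
count_records <- function(path, chunk_size = 100000L, header = TRUE) {
  con <- file(path, open = "r")
  on.exit(close(con))
  n <- 0  # double, so counts above .Machine$integer.max do not overflow
  repeat {
    lines <- readLines(con, n = chunk_size)
    if (length(lines) == 0L) break
    n <- n + length(lines)
  }
  if (header) max(n - 1, 0) else n
}

# Hypothetical helper: apply the rule of thumb above to a record count.
suggest_approach <- function(n_records) {
  if (n_records <= 1e6) {
    "standard R"
  } else if (n_records <= 1e9) {
    "R with additional effort"
  } else {
    "MapReduce"
  }
}

path <- tempfile(fileext = ".csv")
write.csv(data.frame(id = 1:5000, value = runif(5000)), path, row.names = FALSE)
n <- count_records(path)
n                    # 5000
suggest_approach(n)  # "standard R"
```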

For large tables in R, dplyr's inner_join() is much faster than base R's merge().
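A rough way to check this on one's own data is to time both joins with system.time(). The tables below are made-up example data, and the relative timings will depend on the machine, the data, and the package versions. Note that merge() sorts the result by the key by default, while inner_join() keeps the row order of the left table, so the results are compared after sorting.

```r
library(dplyr, warn.conflicts = FALSE)

set.seed(42)
n <- 1e6
# Made-up example tables sharing a key column "id"
left  <- data.frame(id = sample.int(n), x = runif(n))
right <- data.frame(id = sample.int(n, n / 2), y = runif(n / 2))

t_merge <- system.time(res_merge <- merge(left, right, by = "id"))
t_join  <- system.time(res_join  <- inner_join(left, right, by = "id"))

# Elapsed seconds for each approach
c(merge = t_merge[["elapsed"]], inner_join = t_join[["elapsed"]])

# Same matches, possibly in a different row order
identical(sort(res_merge$id), sort(res_join$id))
```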

BASE R, THE TIDYVERSE, AND DATA.TABLE: A COMPARISON OF R DIALECTS TO WRANGLE YOUR DATA
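As a small illustration of the three dialects named in that title, the same grouped mean can be written in base R, dplyr, and data.table. The data frame is a made-up example; all three versions give a mean of 2 for group "a" and 3 for group "b".

```r
library(data.table)
library(dplyr, warn.conflicts = FALSE)

df <- data.frame(g = c("a", "b", "a", "b"), v = c(1, 2, 3, 4))

# Base R: formula interface of aggregate()
base_res <- aggregate(v ~ g, data = df, FUN = mean)

# tidyverse: dplyr verbs chained with the pipe
tidy_res <- df %>% group_by(g) %>% summarise(mean_v = mean(v))

# data.table: DT[i, j, by] syntax
dt_res <- as.data.table(df)[, .(mean_v = mean(v)), by = g]

base_res
tidy_res
dt_res
```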

dplyr backends: multidplyr 0.1.0, dtplyr 1.1.0, dbplyr 2.1.0
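Of these backends, dtplyr lets dplyr code run on data.table: lazy_dt() wraps a data frame, dplyr verbs are translated into data.table syntax, and the computation happens when the result is collected with as_tibble() (or as.data.frame()). The following is a minimal sketch with made-up data, assuming dtplyr and dplyr are installed. multidplyr (partitions across worker processes) and dbplyr (SQL databases) likewise keep dplyr verbs as the interface over a different engine.

```r
library(dtplyr)
library(dplyr, warn.conflicts = FALSE)

df <- data.frame(g = rep(c("a", "b"), each = 5), v = 1:10)

# Build a lazy query; nothing is computed yet
lazy <- lazy_dt(df) %>%
  group_by(g) %>%
  summarise(total = sum(v))

show_query(lazy)           # prints the generated data.table code
result <- as_tibble(lazy)  # executes the query and returns a tibble
result
```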

For row-binding many data frames, data.table's rbindlist() is the fastest method and base R's rbind() is the slowest; dplyr's bind_rows() is about half as fast as rbindlist().
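These relative speeds can be checked by binding the same list of data frames with each function and timing the calls. The list of 1,000 small data frames is made-up example data, and the actual ratios will vary with data size, column types, and package versions.

```r
library(data.table)
library(dplyr, warn.conflicts = FALSE)

set.seed(1)
# Made-up input: 1,000 data frames with identical columns, 100 rows each
dfs <- lapply(1:1000, function(i) data.frame(id = i, x = runif(100)))

t_rbind     <- system.time(r_base  <- do.call(rbind, dfs))
t_bind_rows <- system.time(r_dplyr <- bind_rows(dfs))
t_rbindlist <- system.time(r_dt    <- rbindlist(dfs))  # returns a data.table

# Elapsed seconds for each method
c(rbind     = t_rbind[["elapsed"]],
  bind_rows = t_bind_rows[["elapsed"]],
  rbindlist = t_rbindlist[["elapsed"]])
```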

2.2 Resources for the data.table package

2.3 Resources for measuring R performance

2.4 Resources for PostGIS database

PostGIS is an optional extension that must be enabled separately in each database before it can be used there.
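Enabling it is done with the SQL statement CREATE EXTENSION postgis; run once per database (the PostGIS software must already be installed on the server). A minimal sketch of doing this from R, assuming the DBI package, a PostgreSQL driver such as RPostgres, and a database role allowed to create extensions; enable_postgis() and the database name are hypothetical.

```r
# Hypothetical helper: enable PostGIS in the database that `con` is connected to
# and return the installed PostGIS version as a check.
# Assumes `con` is a DBI connection to PostgreSQL and the role may create extensions.
enable_postgis <- function(con) {
  DBI::dbExecute(con, "CREATE EXTENSION IF NOT EXISTS postgis;")
  DBI::dbGetQuery(con, "SELECT PostGIS_Version();")
}

# Usage (requires a running PostgreSQL server with PostGIS installed):
# con <- DBI::dbConnect(RPostgres::Postgres(), dbname = "my_database")  # hypothetical name
# enable_postgis(con)  # repeat for every database that needs PostGIS
# DBI::dbDisconnect(con)
```

Using IF NOT EXISTS makes the call safe to repeat on a database where the extension is already enabled.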